I have been working at Microsoft since 2018, first as a contractor, then full time.

One area of my contributions was the Mixed Reality Toolkit (MRTK), an open source set of tools for developing HoloLens and Augmented Reality applications in Unity.

Leading up to the release of the HoloLens 2, Microsoft kept an internal version of MRTK. Most of my work (such as the Pressable Button and the speech improvements) was done there. Other work included updating older MRTK functionality and chasing down bugs.

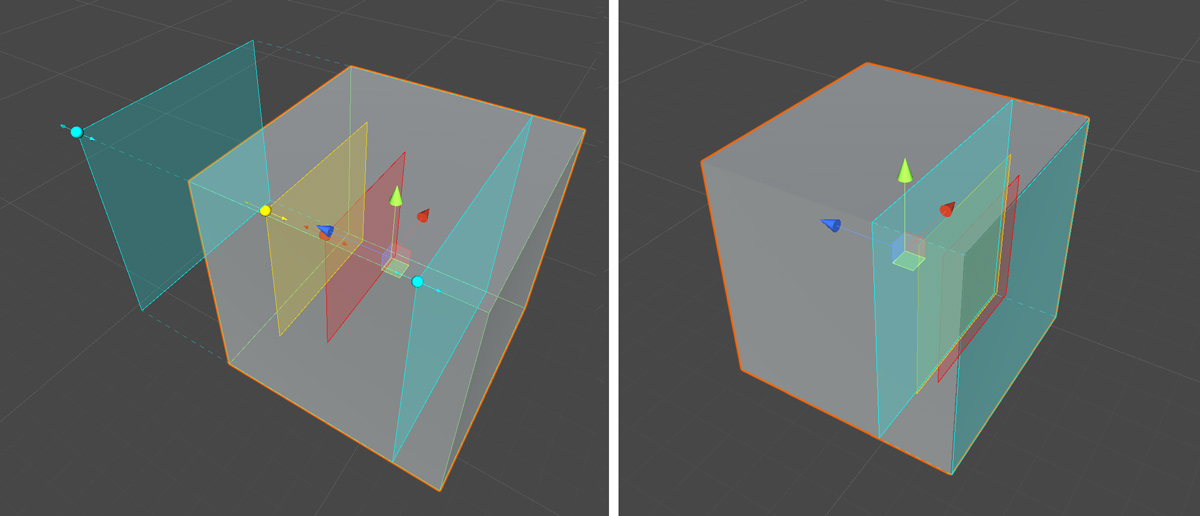

Pressable Buttons

HoloLens 2 introduces near interaction with your hands. This means you can reach out and press a button in front of you.

This kind of interaction comes with high expectations for feedback, visual conveyance and implementation flexibility.

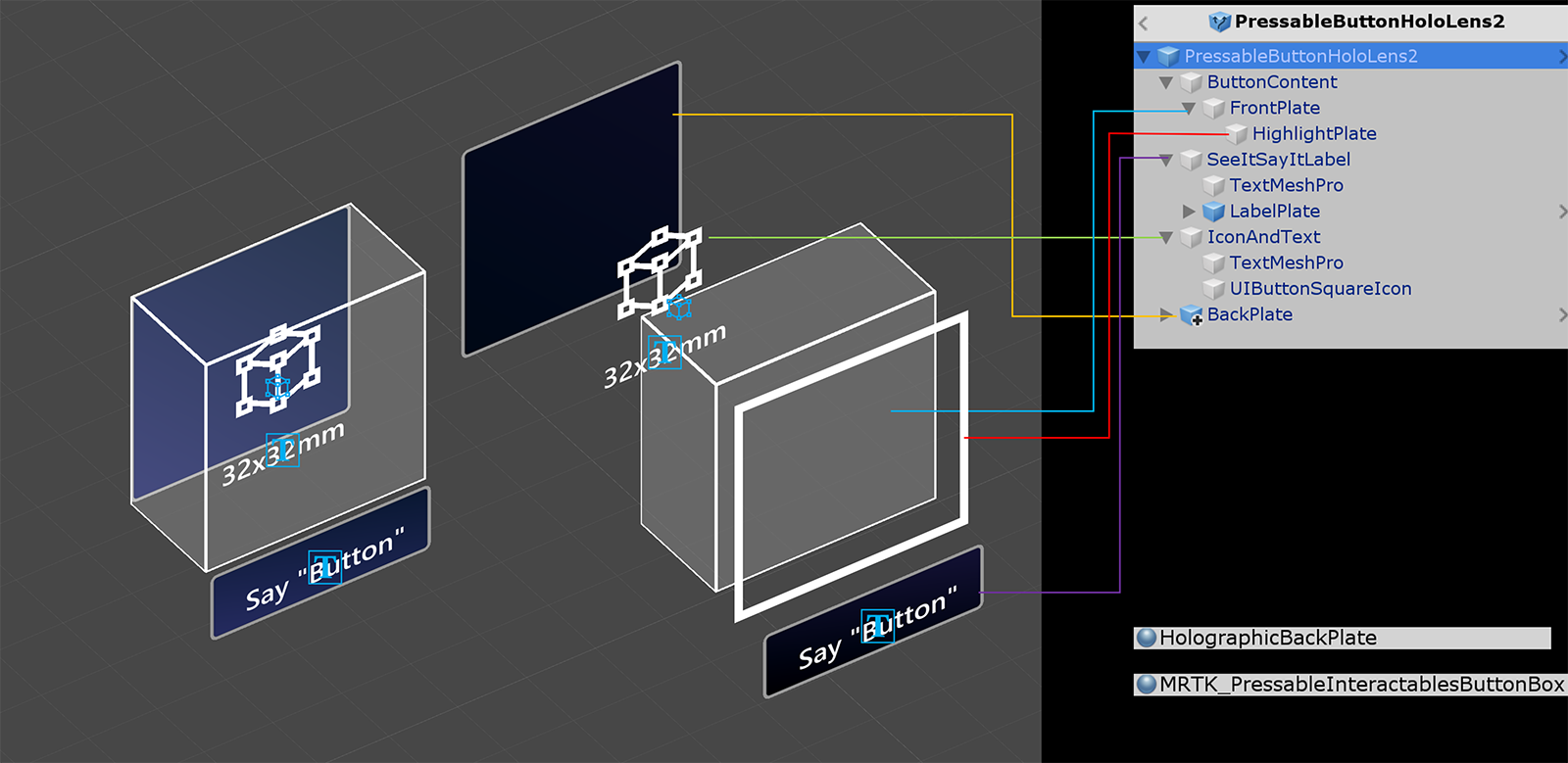

The Hierarchy of a Pressable Button supports a number of features.

- Support for static/non-static content: Content can either move with the button or remain in place while the button is pressed.

- Compressing button support: The button actually gets slimmer as you press it.

- SeeItSayIt Label: Appears when you hover your finger or a cursor over the button.

- Finger Proximity Effects: The Highlight Plate illuminates when a finger is near.

- Press Glow Pulse: When you press the button, a visual pulse travels across the Highlight Plate.

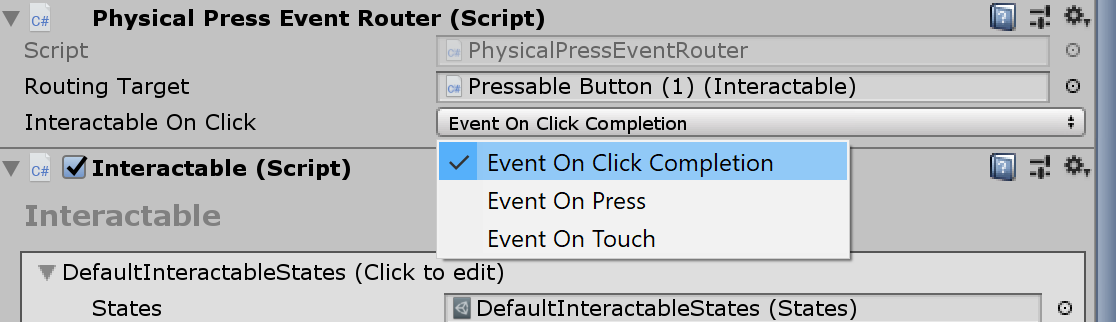

- Event Router: An abstraction layer for defining what a button should count as a click. It fires events into the Interactable component.

- Backpress Detection: Developers often want to avoid false positives, such as a finger approaching a button from behind.

- Rapid Press Detection: Buttons can respond to relatively fast hand movement, which keeps them feeling responsive.
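To make the backpress and rapid press ideas above concrete, here is a minimal sketch of how such a check could work. It is not MRTK's actual code (MRTK is written in C# for Unity); the class name, the thresholds and the "depth along the push axis, in metres" convention are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class PressDetectorSketch:
    """Hypothetical press detector; all names and values are illustrative."""

    press_depth: float = 0.010    # assumed: travel past the front plate that counts as a press
    release_depth: float = 0.004  # assumed: shallower than this releases (hysteresis)
    pressed: bool = False
    armed: bool = False           # True once the finger has been seen in front of the plate
    events: list = field(default_factory=list)

    def update(self, depth: float) -> None:
        """Feed one fingertip sample.

        depth <= 0 means the fingertip is in front of the front plate,
        depth > 0 means it has travelled that far into the button.
        """
        if depth <= 0.0:
            # Backpress rejection: only a finger seen in front may press.
            self.armed = True
        if not self.pressed and self.armed and depth >= self.press_depth:
            # Only the current sample is compared to the threshold, so a fast
            # hand that jumps past the press plane in one frame still counts.
            self.pressed = True
            self.events.append("pressed")
        elif self.pressed and depth <= self.release_depth:
            self.pressed = False
            self.events.append("released")

    def end_touch(self) -> None:
        """Call when the finger leaves the button's tracking volume."""
        if self.pressed:
            self.pressed = False
            self.events.append("released")
        self.armed = False


front = PressDetectorSketch()
for sample in (-0.02, 0.002, 0.012, 0.003):
    front.update(sample)

behind = PressDetectorSketch()
for sample in (0.03, 0.012, 0.003):
    behind.update(sample)
```

In this sketch the finger approaching from the front produces a press followed by a release, while the finger that first appears behind the button produces no events. Using a separate, shallower release threshold is one way to keep a button from flickering between states when the fingertip hovers near the press plane.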

Many adjustable ranges go into defining a good Near Interaction Button. I tried to give developers a wealth of controllable options, while still allowing them to replace any section that does not fit their widely varying needs.

The HoloLens operating system served as the original reference for how Pressable Buttons should look and function. The operating system is not built in Unity, which meant a separate Unity implementation was necessary. As I built my implementation, it served as the prototyping ground, under regular review by the design leadership, who wanted to improve the feedback, responsiveness and fidelity of HoloLens’ buttons. These reviews and my prototyping efforts resulted in both implementations evolving in tandem.

It felt great to contribute such a foundational control mechanism to a toolkit that hundreds of enterprise companies will use!

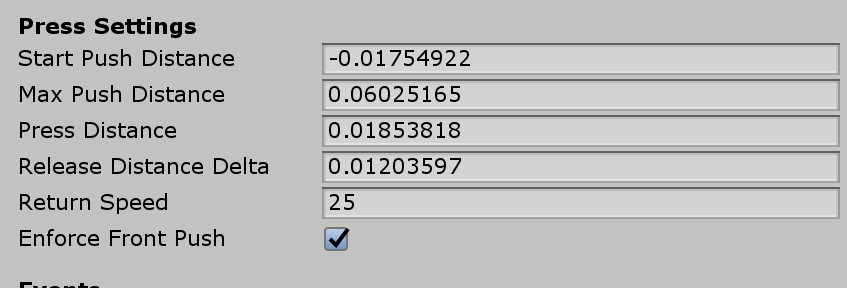

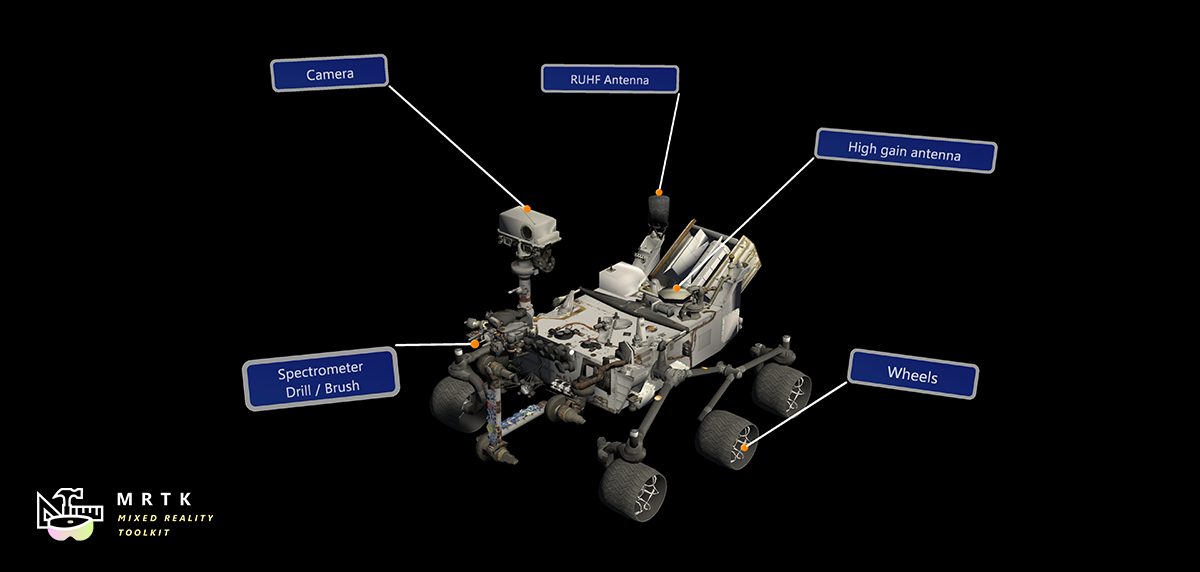

Tooltips

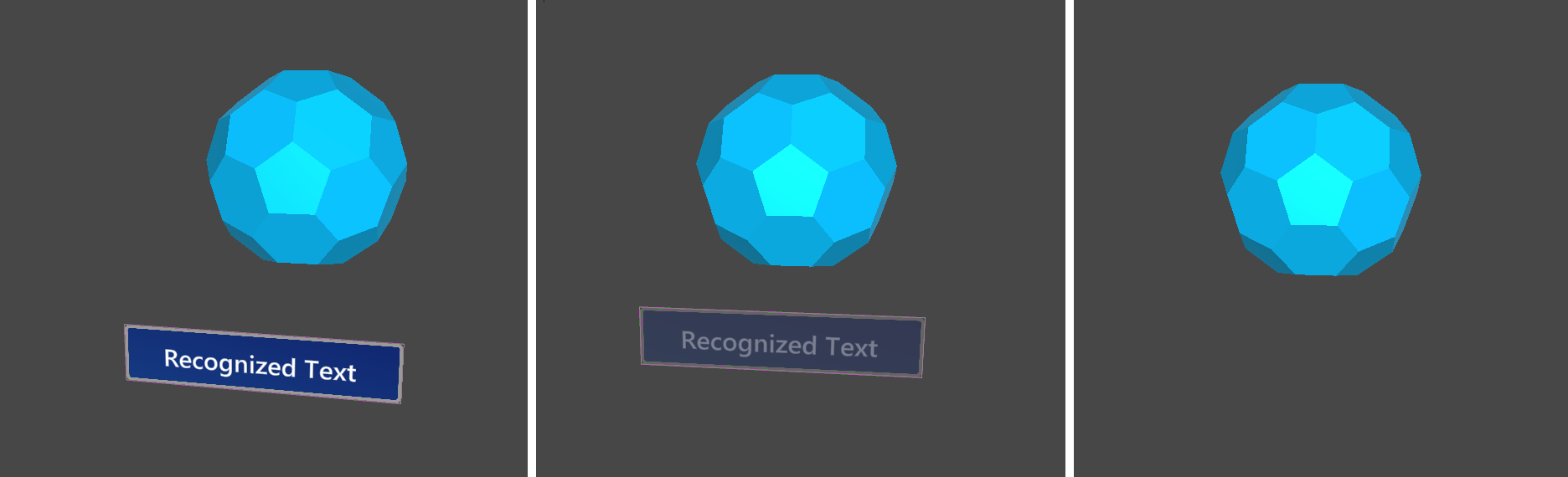

Tooltips were a feature from MRTK V1 that I ported and upgraded for the internal MRTK vNext.

A common enterprise use case was labeling elements with precise control over the location of both the label and its connecting line.

MRTK vNext introduced LineDataProviders, which could define the placement behavior for the connecting line. A portion of my work was adding support for these and chasing down the bugs introduced by the wealth of options from the new data providers.

Another new feature was the idea of DistortionModes, which could modify incoming line data, allowing the tooltip’s line to move or animate and draw the user’s attention to a particular label and the labeled object.
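As a rough illustration of that idea, the sketch below passes the points of a straight connecting line through a distortion function before they would be drawn. It is not MRTK's API: `straight_line`, `wave_distortion` and `distorted_line` are hypothetical names, and the sine wave is just one assumed example of a distortion.

```python
import math
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]


def straight_line(start: Vec3, end: Vec3, count: int) -> List[Vec3]:
    """Hypothetical stand-in for a line data provider: evenly spaced points."""
    if count < 2:
        raise ValueError("a line needs at least two points")
    return [
        tuple(s + (e - s) * i / (count - 1) for s, e in zip(start, end))
        for i in range(count)
    ]


def wave_distortion(amplitude: float, time: float) -> Callable[[Vec3, float], Vec3]:
    """Build a distortion that ripples the line vertically (assumed example)."""

    def distort(point: Vec3, t: float) -> Vec3:
        # sin(pi * t) is zero at t = 0 and t = 1, so both ends of the line
        # stay attached to the label and to the labeled object.
        envelope = math.sin(math.pi * t)
        offset = amplitude * envelope * math.sin(2.0 * math.pi * (t + time))
        x, y, z = point
        return (x, y + offset, z)

    return distort


def distorted_line(points: List[Vec3], distort: Callable[[Vec3, float], Vec3]) -> List[Vec3]:
    """Apply a distortion to every point, passing its normalized position t."""
    last = max(len(points) - 1, 1)
    return [distort(p, i / last) for i, p in enumerate(points)]


base = straight_line((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), 5)
rippled = distorted_line(base, wave_distortion(amplitude=0.1, time=0.0))
```

Calling `wave_distortion` again each frame with an increasing `time` value would make the ripple travel along the line, which is the kind of motion that can pull the eye toward a label.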

Speech

Another part of my work for HoloLens Tips went into a portion of the speech features.

Speech is a key modality for AR, especially in hands-free enterprise scenarios.

I helped refine the localization system and the action routing, and built many prototypes for future hardware.